If you want to contribute on a smaller, more feasible scale, you can help distribute their database by seeding torrents.

I know the last time this came up there was a lot of user resistance to the torrent scheme. I’d be willing to seed 200-500 GB, but having minimum torrent sizes of 1.5 TB and larger really limits the number of people willing to give up that storage, and it defeats a lot of the resiliency of torrents given how bloody long it takes to get a complete copy. I know that 1.5 TB takes a massive chunk out of my already pretty full NAS, and I passed on seeding the first time for that reason.

It feels like they didn’t really subdivide the database as much as they should have…

There are plenty of small torrents. Use the torrent generator and tell the script how much space you have and it will give you the “best” (least seeded) torrents whose sum is the size you give it. It doesn’t have to be big, even a few GB is suitable for some smaller torrents.
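To make the idea concrete, here is a rough sketch of how a "least seeded first, within your space budget" pick could work. This is not the actual torrent generator script. The `Torrent` class, the `pick_torrents` function, and the catalogue entries are all made up for illustration, and the real tool may well select differently.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Torrent:
    name: str        # hypothetical label, not a real torrent name
    size_gb: float
    seeders: int


def pick_torrents(torrents, budget_gb):
    """Greedy pick: least-seeded first, skipping anything that no longer fits."""
    chosen = []
    remaining = budget_gb
    # Fewest seeders first; among ties, prefer the larger torrent.
    for t in sorted(torrents, key=lambda t: (t.seeders, -t.size_gb)):
        if t.size_gb <= remaining:
            chosen.append(t)
            remaining -= t.size_gb
    return chosen


# Made-up catalogue for illustration only.
CATALOGUE = [
    Torrent("big_old", 1500.0, 2),
    Torrent("medium_a", 300.0, 3),
    Torrent("medium_b", 150.0, 4),
    Torrent("small_a", 40.0, 10),
    Torrent("small_b", 5.0, 12),
]

picked = pick_torrents(CATALOGUE, budget_gb=500.0)
for t in picked:
    print(f"{t.name}: {t.size_gb} GB, {t.seeders} seeders")
```

With a 500 GB budget the 1.5 TB torrent is skipped because it cannot fit, and the remaining space goes to the next least-seeded ones. A greedy pass like this is simple but will not always fill the budget perfectly.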

Almost all the small torrents that I see pop up are already relatively well seeded (~10 seeders) though, which reinforces the fact that A. the torrents most desperately needing seeders are the older, largest ones and B. large torrents don’t attract seeders because of unreasonable space requirements.

Admittedly, newer torrents seem to be split into pieces of 300 GB or less, which is good, but there are still a lot of monster torrents in that list.

Thx.

Do you know how useful it is to host such a torrent? Who is accessing the content via that torrent?

Anyone who wants to. I think a lot of LLM trainers access them.

Doesn’t sound like I should host some of it. I’d be more down to host it for end users.

The selection is literally all books that can be found on the internet.

So how big is that?

According to their total dataset size excluding duplicates, over 900 TB

Sure, that’s a bit more than $65,000 per year with Backblaze.
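For anyone who wants to check that figure, here is the back-of-the-envelope math as a small sketch. The 913.1 TB total is the number quoted elsewhere in this thread; the $6 per TB per month rate is an assumption about Backblaze B2 list pricing, so verify the current price before relying on it. Egress and transaction fees are ignored. At that rate it comes out to roughly $65,700 per year.

```python
TOTAL_TB = 913.1               # total excluding duplicates, as quoted in this thread
PRICE_PER_TB_MONTH_USD = 6.0   # assumption: B2 list price, check the current rate


def yearly_storage_cost(total_tb, price_per_tb_month):
    """Storage-only cost for one year; ignores egress and API fees."""
    return total_tb * price_per_tb_month * 12


cost = yearly_storage_cost(TOTAL_TB, PRICE_PER_TB_MONTH_USD)
print(f"${cost:,.0f} per year")
```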

Shit, my Synology has more than that… alas, it is full of movie “archives”

You run a petabyte Synology at home?

Well, it’s not just a single Synology, it’s got a bunch of expansion units, and there are multiple host machines.

I’m guessing you’re talking GBs?

Nope.

That’s awesome - how many drives and of what sizes do you have? Also, why Synology instead of a higher-end, enterprise-grade solution at this point?

They put a link in with the total…

Total (excluding duplicates): 133,708,037 files, 913.1 TB

It’s an investment. It’s like the price of a small car. But it was built over time, so not like one lump sum.

Originally, it was to have easier access to my already insane Blu-ray collection. But I started getting discs from Redbox, rental stores, libraries, etc. They are full rips, not that compressed PB stuff. Now there are like 3000 movies and fuck knows how many TV shows.

A lot of my effort was to have the best release available. Or, have things that got canceled. Like the Simpsons episode with MJ, which is unavailable to stream.

Snags… well, Synology is sooo easy. Once you figure out how you want your drives set up, there’s nothing to it.

Whatever you do, always have redundant drives. Yes, you lose space, but eventually one of them is gonna die and you don’t want to lose data.

You should write a will instructing your family to send those disks to the internet archive for preservation if something happened to you.

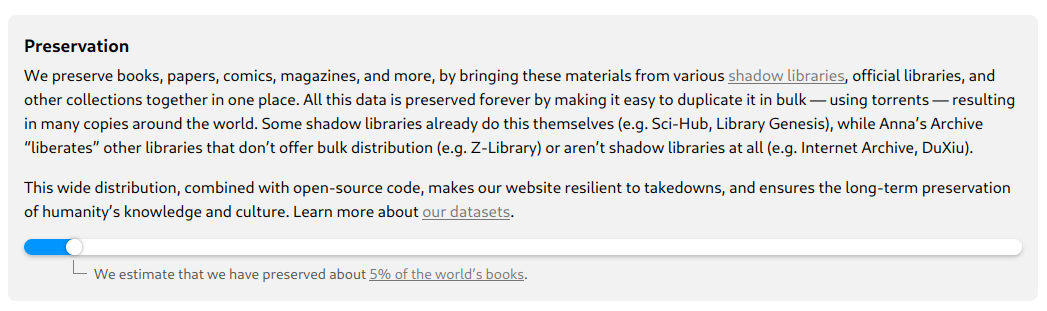

Correct me if I’m wrong, but they only index shadow libraries and do not host any files themselves (unless you count the torrents). So, you don’t need 900+ TB of storage to create a mirror.

I imagine a couple of terabytes at the very least, though, I could be underestimating how many books have got deDRMed so far.

Apparently it’s 900TB

Girl, what? No wonder they’re having trouble hosting their archive. Does Anna’s Archive host copyrighted content as well or is all that copyleft?

They host academic papers and books, most of which are copyrighted. They recently got in trouble for scraping a book metadata service to generate a list of books that haven’t been archived yet: https://torrentfreak.com/lawsuit-accuses-annas-archive-of-hacking-worldcat-stealing-2-2-tb-data-240207/

They index, not host, no? (Unless you count the torrents, which are distributed)

Is hosting all that stuff even legal? I mean, they’re not making any money off of it, but they’re still a “piracy” hub. How have they survived this long?

The archive includes copyrighted works. Often multiple copies of each work, across different formats.

I guess more than 5?

Bigger than Z-Library or Project Gutenberg?

It is huge! They claimed to have preserved about 5% of the world’s books.

Could anyone broad-stroke the security requirements for something like this? Looks like they’ll pay for hosting up to a certain amount, and between that and a pipeline to keep the mirror updated I’d think it wouldn’t be tough to get one up and running.

Just looking for theory - what are the logistics behind keeping a mirror like this secure?

Could be worth asking on selfhosted (how do I link a sub on Lemmy?). They probably have more relevant experience at this sort of thing.

Edit

Does this work?

!selfhosted@lemmy.world might work for more people.

Is probably more suitable. I’d be interested in the total size, though.

900 TB, according to other comments here.

Is it all or nothing sort of deal?

There are partial torrents, also according to the other comments.

It does. 😉

They outline it pretty well here:

This is a fascinating read

Also link any ways to donate if they’re accepting that.

I had no idea about this project. Is it like a better search engine for libgen etc?

It searches through the Libgen mirrors and Z-Library, and has its own mirrors of the files they serve on top of that. I think it was created as a response to Z-Library’s domain getting seized, but I could be wrong.

It has way more content than Libgen