Everything on here is awesome right now. It feels like an online forum from the 2000s: everyone is friendly and optimistic, and there’s a sense that this is the start of something big.

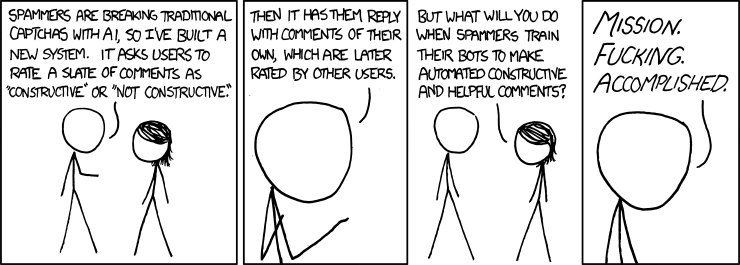

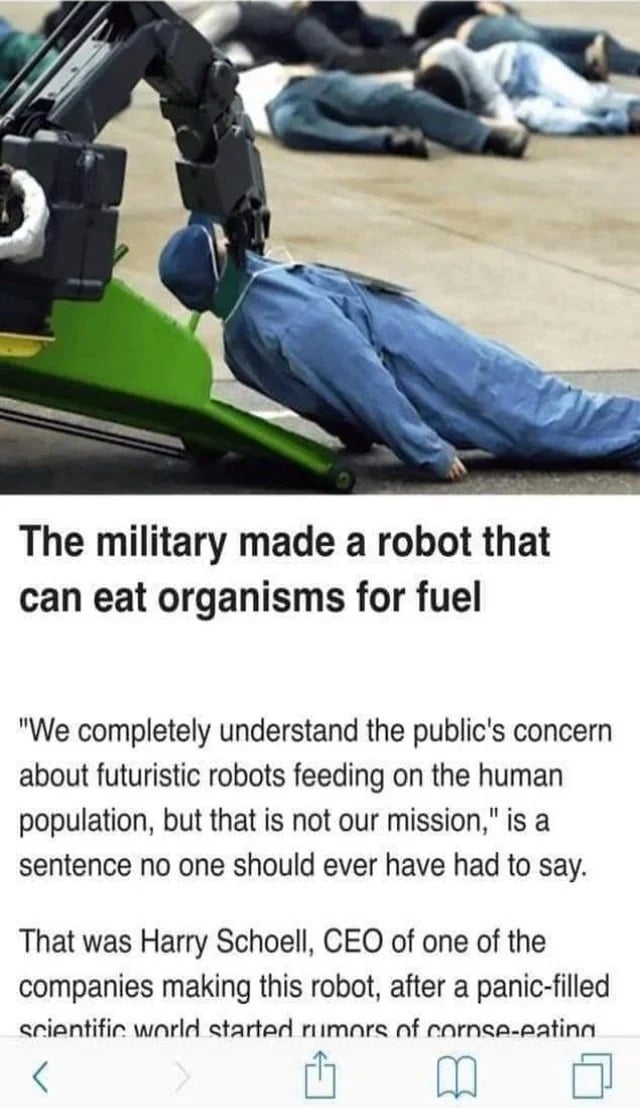

Well, as we all know, AI has gotten so smart that CAPTCHAs are useless, and it can take part in social forums disguised as a human.

With Reddit turning into propaganda central and a greedy CEO who has a motive to sell Reddit data to AI farms, I worry that AI could be prompted to target sites such as those in the fediverse.

Right now it sounds like paranoia, but I think we are closer to this reality than we may know.

Reddit got nuked, so we built a new community. Everyone is pleasantly surprised by the change of vibe around here: the overall friendliness and the nostalgia of old forums.

Could this be the calm before the storm?

How will the fediverse protect itself from these hypothetical bot armies?

Do you think Reddit/big companies will make attacks on the fediverse?

Do you think clickbait posts will start popping up in pursuit of ad revenue?

What are your thoughts and insights on this new “internet 2.0”?

Which of the following would you most prefer? A: A puppy, B: A pretty flower from your sweetie, or C: A large properly formatted data file?

unexpected !futurama

The tortoise lays on its back, its belly baking in the hot sun, beating its legs trying to turn itself over, but it can’t. Not without your help. But you’re not helping.

Is the puppy mechanical in any way?

Greetings, fellow humans. Do you enjoy building and living in structures, farming, cooking, transportation, and participating in leisure activities such as sports and entertainment as much as I do?

d’ya catch that ludicrous display last noyt?

What was Wenger thinking, sending Walcott on that early?

Tbh, I’m less concerned with bots and more concerned with actual humans being dicks. Lemmy is still super new, relatively low-traffic and kind of a pain to get involved with, but as it grows, the number of bad actors will grow with it, and I don’t know that the mod tools are up to the job. The amount of work that mods on The Other Site had to put in to keep communities from being overrun by trolls and generally nasty people was huge.

How’d Mastodon cope with their big surge in popularity?

The normies all went back to Twitter.

This is why, unlike many others here, I hope Reddit has a long and successful existence. Let it be the flytrap.

Since it’s a decentralized, federated network, I guess any solution involving anti-bot bots can only be implemented on individual servers in the fediverse. That means there can also be bot-infected servers (or even zombie servers, run entirely by bots) that are, or will try to be, federated with the rest of the fediverse. It will then be up to admins to identify the bots with the anti-bot bots and to decide whether to defederate the infected servers. I also don’t know how effective CAPTCHAs are against AI these days, so I won’t comment on that.
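To make the admin side of that a bit more concrete, here is a minimal Python sketch of the shortlisting step. It assumes some anti-bot tool already produces a list of flagged `user@instance` handles and that the server counts how many accounts it has seen from each remote instance; the function name `defederation_candidates`, the thresholds, and the input format are illustrative assumptions, not an existing Lemmy API.

```python
from collections import Counter


def defederation_candidates(flagged_handles, accounts_seen, min_accounts=20, max_bot_share=0.5):
    """Shortlist remote instances whose share of bot-flagged accounts is high.

    flagged_handles: iterable of "user@instance" handles flagged by an anti-bot tool.
    accounts_seen: dict mapping instance domain -> number of accounts seen from it.
    Thresholds are hypothetical; an admin still makes the final call.
    """
    # Count flagged accounts per instance (the part after the first "@").
    flagged_per_instance = Counter(h.split("@", 1)[1] for h in flagged_handles)
    candidates = []
    for instance, total in accounts_seen.items():
        if total == 0 or total < min_accounts:
            continue  # too few accounts to judge fairly
        share = flagged_per_instance[instance] / total
        if share > max_bot_share:
            candidates.append((instance, share))
    # Worst offenders first.
    return sorted(candidates, key=lambda pair: pair[1], reverse=True)


flagged = [f"bot{i}@zombie.example" for i in range(18)] + ["spammer@friendly.example"]
seen = {"zombie.example": 20, "friendly.example": 500, "tiny.example": 3}
print(defederation_candidates(flagged, seen))  # [('zombie.example', 0.9)]
```

The output is only a shortlist: the defederation decision itself stays with a human admin, and the minimum-account cutoff keeps small instances from being judged on a handful of accounts.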

We went through this with e-mail. There are mail servers that became known as spam hubs, and they were universally banned. More and more sophisticated tools for moderating spam, phishing, and scam providers and squashing bad actors are still being developed today. It’s an ongoing arms race, and I don’t think it would be any different, or any harder, with the fediverse.

Oh, absolutely. Some of the worst things that plague social media platforms (spam bots, troll farms, and influence campaigns) haven’t bothered to target Lemmy because no one was here.

But an influx of users means an increase in targets. In the same way we’re settling in and learning the platform, so are they. It’s gonna start ramping up real soon once they work out the optimal strategy. And the most worrying thing is that, because of the way the fediverse works, combating them is going to be substantially more complicated.

That is maybe the biggest benefit of a centralized platform, and it’s a trade off we’re going to have to learn to accept and deal with.

I wonder if IP filtering would be ideal. Most companies have fixed IP blocks, so it seems viable to simply block corporate IP addresses from posting by default, or to severely rate-limit them. They would then have to come from the general user IP space to post more than, say, once an hour or once a day.

That’s obviously not a perfect solution: many people on VPNs would need to be whitelisted, and it could hurt the user experience for some people. But it would be a good kill switch to have in place the day the ad army attacks.
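A rough Python sketch of that kill switch, assuming the admin maintains the list of corporate CIDR blocks and the VPN allowlist by hand. The class name `CorporateIpGate`, the one-post-per-hour interval, and the example ranges (documentation-only addresses from RFC 5737) are placeholders, not a real blocklist.

```python
import ipaddress
import time


class CorporateIpGate:
    """Rate-limit posting from listed IP blocks; other addresses post freely.

    Hypothetical sketch: the networks, allowlist and interval are placeholders.
    """

    def __init__(self, blocked_networks, allowlist=(), interval_seconds=3600):
        self.blocked = [ipaddress.ip_network(n) for n in blocked_networks]
        self.allowlist = {ipaddress.ip_address(a) for a in allowlist}
        self.interval = interval_seconds
        self.last_post = {}  # address -> time of its last accepted post

    def may_post(self, ip_string, now=None):
        now = time.time() if now is None else now
        ip = ipaddress.ip_address(ip_string)
        if ip in self.allowlist:
            return True  # e.g. a whitelisted VPN exit
        if not any(ip in net for net in self.blocked):
            return True  # ordinary address space: no extra limit here
        last = self.last_post.get(ip)
        if last is not None and now - last < self.interval:
            return False  # still inside the one-post-per-interval window
        self.last_post[ip] = now
        return True


# RFC 5737 documentation ranges stand in for real corporate blocks.
gate = CorporateIpGate(["198.51.100.0/24"], allowlist=["198.51.100.7"])
print(gate.may_post("198.51.100.5", now=0))   # True: first post
print(gate.may_post("198.51.100.5", now=60))  # False: within the hour
print(gate.may_post("198.51.100.7", now=60))  # True: allowlisted
print(gate.may_post("203.0.113.9", now=60))   # True: not a listed block
```

This limits each address separately, so on its own it does nothing against someone who can rotate addresses at will.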

Aside from that, you could also block links to specific destinations: say, anything that points to Meta or any of Meta’s IP blocks gets automatically blacklisted, so that people would have to copy the link text and paste it into a browser to follow it. But pulling that off would require at least a level-5 regex wizard whose beard is no less than 7 inches long.
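Most of the regex wizardry can be avoided by parsing URLs instead of pattern-matching them by hand. A minimal Python sketch, assuming a hand-maintained domain blocklist (the domains below are only examples): blocked links get wrapped in backticks, so Markdown renders them as inline code rather than a clickable link and readers have to copy and paste them. Matching Meta’s IP blocks as well would need DNS lookups, which this sketch leaves out; `is_blocked` and `defang_links` are hypothetical names.

```python
import re
from urllib.parse import urlsplit

# Example blocklist only; an admin would maintain their own.
BLOCKED_DOMAINS = {"facebook.com", "instagram.com"}

# Deliberately simple: an http(s) URL runs until the next whitespace.
URL_PATTERN = re.compile(r"https?://\S+")


def is_blocked(url):
    try:
        host = urlsplit(url).hostname or ""  # hostname is already lowercased
    except ValueError:
        return False  # malformed URL; leave it alone
    # Block the domain itself and any subdomain (www.facebook.com, m.facebook.com, ...).
    return any(host == d or host.endswith("." + d) for d in BLOCKED_DOMAINS)


def defang_links(text):
    """Wrap blocked URLs in backticks so Markdown shows them as code, not links."""
    def replace(match):
        url = match.group(0)
        return f"`{url}`" if is_blocked(url) else url
    return URL_PATTERN.sub(replace, text)


print(defang_links("see https://www.facebook.com/page and https://example.org"))
# see `https://www.facebook.com/page` and https://example.org
```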

IP-style blocking just isn’t feasible. In certain regions it’s very easy to get your IP changed. I recall a community where someone in a third-world country evaded bans so often that banning him effectively meant banning an entire continent.

Those issues are coming, and we will have to develop tools to fight them.

One such tool would be our own AI protecting us. It could learn from content banned by admins, and that information could be shared between instances. It should also run in an active learning loop so that it is constantly being retrained.

Sounds like the start of a cheap sci-fi movie.

Positively marking accounts that interact with known humans could also be useful, as would reports from users like us.

We can call the AI “Blackwall”

I just assume I am the only actual Human on the Internet, and the rest of you are all bots.

💀

I am a figment of your imagination.

(Imagine me with better hair, please.)

No, you

All the things you’re concerned about are inevitable; what makes the difference is how we engage with them.

We’re already seeing waves of bot-created accounts being banned by admins, and mods are nuking badly behaved users. What’s being caught is probably a drop in the bucket compared to what IS happening. It could be better with more mods and more tools.

Let’s hope AI becomes advanced enough to have its own morals and join our fight, lol

Yes, exactly!

The way we filter spambots should actually be the same way we filter spam humans: downvoting bad posts and comments of any type, then banning those accounts if it happens regularly.
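That rule is simple enough to sketch. In the Python below, an account is only flagged for moderator review when most of its recent posts score below zero; the window of 10 posts, the 5-post minimum, and the 60% ratio are arbitrary assumptions, and `DownvoteMonitor` is a hypothetical name. It treats bots and humans exactly the same way.

```python
from collections import defaultdict, deque


class DownvoteMonitor:
    """Flag accounts whose recent posts are regularly voted below zero.

    Hypothetical sketch: the window, minimum history and ratio are assumptions.
    It only flags accounts for a moderator; it never bans anyone by itself.
    """

    def __init__(self, window=10, min_posts=5, bad_ratio=0.6):
        self.min_posts = min_posts
        self.bad_ratio = bad_ratio
        # account -> scores of its most recent posts; old scores fall off the end
        self.recent_scores = defaultdict(lambda: deque(maxlen=window))

    def record(self, account, score):
        self.recent_scores[account].append(score)

    def should_review(self, account):
        scores = self.recent_scores.get(account, ())
        if len(scores) < self.min_posts:
            return False  # not enough history to call it "regular"
        negative = sum(1 for s in scores if s < 0)
        return negative / len(scores) >= self.bad_ratio


monitor = DownvoteMonitor()
for score in [-3, -1, -5, 2, -4]:
    monitor.record("spammer", score)
for score in [5, -1, 3, 8, 2]:
    monitor.record("regular", score)
print(monitor.should_review("spammer"), monitor.should_review("regular"))  # True False
```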

LMAO this is gold

Unpopular opinion, but karma helped control that kind of stuff: karma minimums and such.

That also created karma-whoring bots, so IDK.

I am a human with soft human skin. Do not run from bots. They are our friends.

Why, just why

There’s a game about that: Horizon: Zero Dawn. Scary stuff.

Could we also use AI to our benefit? We could try coding an AI mod helper that tries to detect and flag posts that are irrelevant, aggressive, etc. It could take the data from the modlogs of all instances, start learning what probably needs to be banned, and then have a human confirm each decision. We could even have a system like Steam’s anti-cheat, where a few users have to validate each report.
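The human-validation half of that idea can be sketched independently of the AI part. Below, a flagged post (whether flagged by a model or by a user report) is only acted on once at least three reviewers have voted and a two-thirds majority agrees; the `Report` structure, the quorum, and the threshold are assumptions for illustration, not how Steam’s system actually works.

```python
from dataclasses import dataclass, field
from fractions import Fraction


@dataclass
class Report:
    """A flagged post awaiting review (hypothetical structure)."""
    post_id: int
    reason: str  # e.g. "flagged by model: aggressive" or a user's report text
    votes: dict = field(default_factory=dict)  # reviewer -> True if they agree it breaks the rules


def cast_vote(report, reviewer, is_violation):
    report.votes[reviewer] = is_violation  # one vote per reviewer; re-voting overwrites


def verdict(report, quorum=3, majority=Fraction(2, 3)):
    """Return "remove", "dismiss", or "pending" based on reviewer votes."""
    if len(report.votes) < quorum:
        return "pending"  # not enough reviewers yet
    # Exact fractions avoid floating-point surprises right at the threshold.
    share = Fraction(sum(report.votes.values()), len(report.votes))
    if share >= majority:
        return "remove"
    if share <= 1 - majority:
        return "dismiss"
    return "pending"  # reviewers disagree: wait for more votes or escalate to a mod


report = Report(post_id=42, reason="flagged by model: aggressive")
for reviewer, vote in [("alice", True), ("bob", True), ("carol", False)]:
    cast_vote(report, reviewer, vote)
print(verdict(report))  # remove
```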

The internet is a constantly changing current - you’re never in one place long.

> Do you think clickbait posts will start popping up in pursuit of ad revenue?

Now that you mention it… yes.

Calm before the storm, sure. Most of the migration away from Reddit (whether or not it ultimately proves consequential) will logically happen when the measures that pushed users to migrate actually take effect.

Either that or the community’s reaction to the third-party app thing was overblown. Given the specific circumstances, I don’t think it was.

That’s a more realistic clear and present danger to the platform IMO: an influx of actual users that makes the numbers to date pale in comparison.

The respective platforms handle bots in subtly different ways, but those differences could lead to profound changes, good or bad. We haven’t actually seen that yet, though, and the software is still a work in progress. The migration so far has really lit a fire under the devs on issues that were identified years ago but saw slow progress, so for now I’m happy to let that play out, and I’m happy with what we’ve already got. I’m sure that if bots become a bigger problem, that’s where the devs will shift their focus.